Over the past couple of years, several of us have dedicated a lot of time to Chrome’s timer system. Because we do things a little differently, this has raised some eyebrows. Here is why and what we did.

Goal

Our goal was to have fast, precise, and reliable timers. By “fast”, I mean that the timers should fire repeatedly with a low period. Ideally we wanted microsecond timers, but we eventually settled for millisecond timers. By “precise”, I mean we wanted the timer system to work without drift – you should be able to monitor timers over short or long periods of time and still have them be precise. And by “reliable”, I mean that timers should fire consistently at the right times; if you set a 3.67ms timer, it should be able to fire repeatedly at 3.67ms without significant variance.

Why?

It may be surprising to hear that we had to do any work to implement these types of timers. After all, timers are a fundamental service provided by all operating systems. Lots of browsers use simpler mechanisms and they seem to work just fine. Unfortunately, the default timers really are too slow.

Specifically, Windows timers by default will only fire with a period of ~15ms. While processor speeds have increased from 500MHz to 3GHz over the past 15 years, the default timer resolution has not changed. And at 3GHz, 15ms is an eternity.
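To put a number on “an eternity”, here is a quick back-of-the-envelope calculation. The function name is made up for illustration, and the clock speeds are the round figures quoted above, not measurements.

```typescript
// Number of CPU clock cycles that elapse during a single timer tick.
function cyclesPerTick(clockHz: number, tickMs: number): number {
  return Math.round(clockHz * (tickMs / 1000));
}

// At 3GHz, one 15ms tick spans 45 million cycles.
const cyclesAt3GHz = cyclesPerTick(3e9, 15);
// At 500MHz, the same tick spanned 7.5 million cycles.
const cyclesAt500MHz = cyclesPerTick(500e6, 15);
```

In other words, the same 15ms tick now costs six times as many cycles of potential work as it did at 500MHz.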

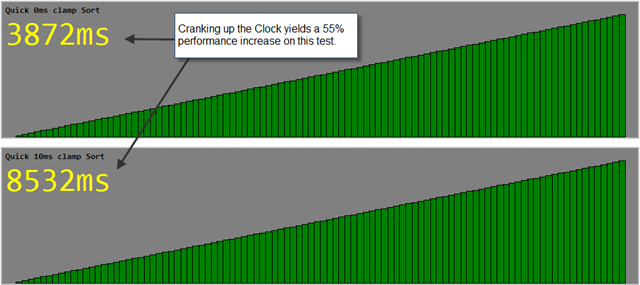

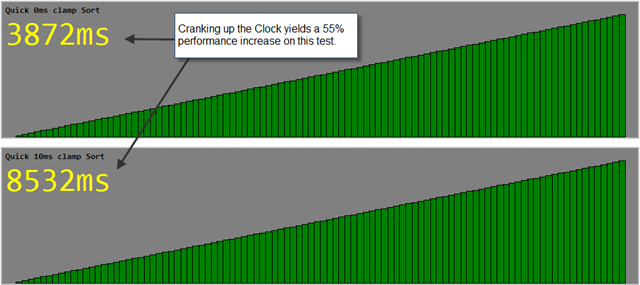

This problem does affect web pages in a very real way. Internally, browsers schedule time-based tasks to run a short distance in the future, and if the clock can’t tick faster than 15ms, that means the application will sleep for at least that long. To demonstrate, Erik Kay wrote a nice visual sorting test. Due to how JavaScript and HTML interact in a web page, applications such as this sorting test use timers to balance execution of the script with responsiveness of the web page.

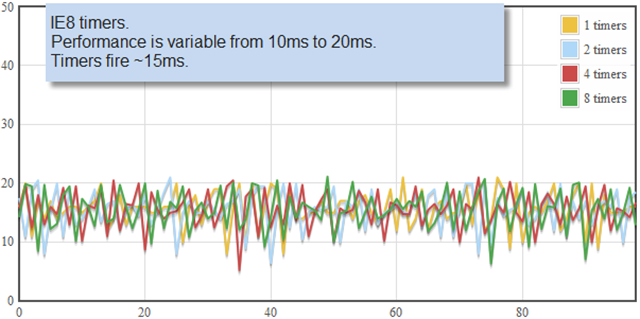

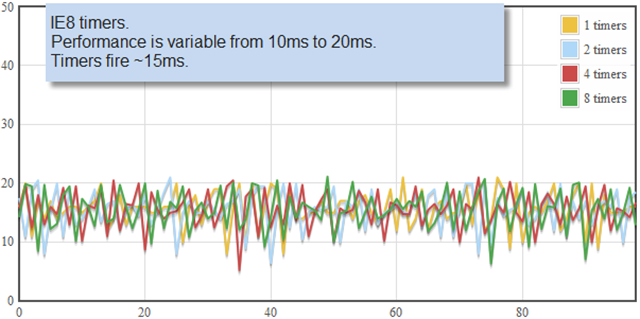

John Resig at Mozilla also wrote a great test for measuring the scalability, precision, and variance of timers. He conducted his tests on the Mac, but here is a quick test on Windows.

In this chart, we’re looking at the performance of IE8, which is similar to what Chrome’s timers looked like prior to our timer work. As you can see, the timers are slow and highly variable. They can’t fire faster than ~15ms.

A Seemingly Simple Solution

Internally, Windows applications are often architected on top of Event Loops. If you want to schedule a task to run later, you must queue up the task and wake your process later. On Windows, this means you’ll eventually land in the function WaitForMultipleObjects(), which is able to wait for UI events, file events, timer events, and custom events. (Here is a link to Chrome’s central message loop code.) By default, the internal timer behind all of the wait functions in Windows ticks every ~15ms. Even if you set a 1ms timeout on these functions, they will only wake up once every 15ms (unless non-timer events are pumped through them).
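One way to picture this is a simplified model in which a timed wait can only expire on a clock tick. This is my own sketch of the behavior described above, not Windows or Chrome code, and the function name is hypothetical.

```typescript
// Simplified model (an assumption, not the real scheduler): a timed wait
// expires on the first clock tick at or after the requested deadline.
function modelWakeTimeMs(startMs: number, timeoutMs: number, tickMs: number): number {
  const deadlineMs = startMs + timeoutMs;
  return Math.ceil(deadlineMs / tickMs) * tickMs;
}

// With the default ~15ms tick, a 1ms timeout requested at t=0 wakes at t=15.
const defaultWakeMs = modelWakeTimeMs(0, 1, 15);
// With a 1ms tick, the same request wakes at t=1.
const fastWakeMs = modelWakeTimeMs(0, 1, 1);
```

Under this model the requested timeout hardly matters once it is shorter than the tick: the tick period sets the floor.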

To change the default timer, applications must call timeBeginPeriod(), which is part of the multimedia timers API. This function changes the clock frequency and is close to what we want. Its lowest granularity is still only 1ms, but that is a lot better than 15ms. Unfortunately, it also has a couple of seriously scary side effects. The first side effect is that it is system wide. When you change this value, you’re impacting global thread scheduling among all processes, not just yours. Second, this API also affects the system’s ability to get into its lowest-power sleep states.
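The system-wide side effect is easier to see in a toy model. The sketch below is not the Win32 API: the class and method names are hypothetical, and it assumes the system runs at the finest period that any process has currently requested, with each request withdrawn by a matching timeEndPeriod() call.

```typescript
// Toy model of a timer period shared by every process on the machine.
class SystemTimerModel {
  private requests: { processName: string; periodMs: number }[] = [];
  private defaultPeriodMs: number;

  constructor(defaultPeriodMs: number = 15) {
    this.defaultPeriodMs = defaultPeriodMs;
  }

  // A process asks for a finer period (stands in for timeBeginPeriod).
  begin(processName: string, periodMs: number): void {
    this.end(processName);
    this.requests.push({ processName, periodMs });
  }

  // The process withdraws its request (stands in for timeEndPeriod).
  end(processName: string): void {
    this.requests = this.requests.filter((r) => r.processName !== processName);
  }

  // Every process observes the finest period that anyone requested.
  effectivePeriodMs(): number {
    let period = this.defaultPeriodMs;
    for (const request of this.requests) {
      period = Math.min(period, request.periodMs);
    }
    return period;
  }
}

const systemTimer = new SystemTimerModel();
systemTimer.begin("app-a", 1);
const whileAppARuns = systemTimer.effectivePeriodMs(); // 1ms for everyone
systemTimer.end("app-a");
const afterAppAExits = systemTimer.effectivePeriodMs(); // back to 15ms
```

The point of the model is that one process asking for 1ms changes the tick for every other process until it lets go.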

Because of these two side effects, we were reluctant to use this API within Chrome. We didn’t want to impact any process other than a Chrome process, and all of the possible impacts of the API were nebulous. Unfortunately, there are no other APIs which could make our message loop work quickly. Although Windows does have a high-performance cycle counter API, that API is slow to execute [1], has bugs on some AMD hardware [2], and has no effect on the system-wide wait functions.

Justifying timeBeginPeriod

At one point during our development, we were about to give up on using the high resolution timers, because they just seemed too scary. But then we discovered something. Using WinDbg to monitor Chrome, we discovered that every major multi-media browser plugin was already using this API. And this included Flash [3], Windows Media Player, and even QuickTime. Once we discovered this, we stopped worrying about Chrome’s use of the API. After all – what percentage of the time is Flash open when your browser is open? I don’t have an exact number, but it’s a lot. And since this API affects the system globally, most browsers are already running in this mode.

We decided to make this the default behavior in Chrome. But we hit another roadblock for our timers.

Browser Throttles and Multi-Process

With the high-resolution timer in place, we were now able to set events quickly for Chrome’s internals. Most internal delayed tasks are long timers, and didn’t need this feature, but there are a half dozen or so short timers in the code, and these did materially benefit. Nonetheless, the timers which matter most, the ones behind the browser’s setTimeout and setInterval functions, did not yet benefit. This is because our WebKit code (and other browsers do this too) was intentionally preventing any timer from sustaining a tick faster than 10ms.
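The throttle itself amounts to a floor on the requested delay. Here is a minimal sketch of the idea; the helper and constant names are hypothetical and this is not the actual WebKit code.

```typescript
// The 10ms floor described above.
const MIN_TIMER_DELAY_MS = 10;

// Hypothetical helper: raise any requested delay to at least the floor.
function clampTimerDelay(requestedMs: number, minMs: number = MIN_TIMER_DELAY_MS): number {
  return Math.max(requestedMs, minMs);
}

// A page calling setTimeout(fn, 0) in a loop still waits 10ms per iteration.
const tightLoopDelayMs = clampTimerDelay(0);
// Long timers pass through unchanged.
const longDelayMs = clampTimerDelay(250);
```

With a floor like this in place, a faster system clock alone cannot speed up a page's timers.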

There are probably several reasons for the 10ms timer in browsers. One is simply convention. But another is that some websites are poorly written, and will set timers to run like crazy. If the browser attempts to service the timers, this can spin the CPU, and who gets the bug report when the browser is spinning? The browser vendor, of course. It doesn’t matter that the real bug is in the website, and not the web browser, so it is important for the browser to address the issue.

But the third, and probably most critical, reason is that most single-process browser architectures can become non-responsive if you allow websites to loop excessively with 0-millisecond delays in their JavaScript. Remember that browsers are generally written on top of Event Loops. If the slow JavaScript interpreter is constantly scheduling a wakeup through a 0ms timer, this clogs the Event Loop which also processes mouse and keyboard events. The user is left with not just a spinning CPU, but a basically hung browser. While I was able to reproduce this behavior in single-process browsers, Chrome turned out to be immune – and the reason was Chrome’s multi-process architecture. Chrome puts the website into a separate process (called a “renderer”) from the browser’s keyboard and mouse handling process. Even if we spin the CPU in a renderer, the browser remains completely responsive, and unless the user is checking her Task Manager, she might not even notice.

So the multi-process architecture was the enabler. We wrote a simple test page to measure the fastest time through the setTimeout call and verified that a tight loop would not damage Chrome’s responsiveness. Then, we modified WebKit to reduce the throttle from 10ms to 1ms and shipped the world’s peppiest beta browser: Chrome 1.0beta.
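A test along these lines can be sketched as follows. This is not the original test page: the function and type names are my own, and the scheduler and clock are passed in as parameters so the same logic can run against a real browser timer or a fake one.

```typescript
type Schedule = (callback: () => void, delayMs: number) => void;
type Clock = () => number;

// Chains `count` zero-delay timers and reports the elapsed time between
// consecutive firings, so the smallest value approximates the fastest trip
// through the timer call.
function measureTimerIntervals(
  schedule: Schedule,
  now: Clock,
  count: number,
  done: (intervalsMs: number[]) => void
): void {
  const intervals: number[] = [];
  if (count <= 0) {
    done(intervals);
    return;
  }
  let last = now();
  const tick = (): void => {
    const current = now();
    intervals.push(current - last);
    last = current;
    if (intervals.length < count) {
      schedule(tick, 0); // ask for the shortest delay the browser allows
    } else {
      done(intervals);
    }
  };
  schedule(tick, 0);
}
```

In a page, one might call it as `measureTimerIntervals((cb, ms) => { setTimeout(cb, ms); }, () => performance.now(), 100, (xs) => console.log(Math.min(...xs)))`; with a 10ms throttle the reported minimum would sit near 10, and with a 1ms throttle near 1.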

Real World Problems

Our biggest fear with shipping the product was that we would identify some website which was spinning the CPU and annoying users. We did identify a couple of these, but they were relatively obscure sites. Finally, we found one which mattered – a small newspaper known as the New York Times. The NYTimes is a well-constructed site – they just ran into a little bug with a popular script called prototype.js, and this hadn’t been an issue before Chrome cranked up the clock. We filed a bug, but we had to change Chrome too. At this point, with a little experimentation we found that increasing the minimum timer from 1ms to 4ms seemed to work reasonably well on most machines. Indeed, to this day, Chrome still uses a 4ms minimum tick.

Soon, a second problem emerged as well. Engineers at Intel pointed out that Chrome was causing laptops to consume a lot more power. This was a far more serious problem and harder to fix. We were not concerned much about the impact on desktops, because Flash, Windows Media Player, and QuickTime were already causing this to be true. But for laptops, this was a big problem. To mitigate this, we started tapping into the Windows Power APIs to monitor when the machine is running on battery power. So before Chrome 1.0 shipped out of beta, we modified it to turn off fast timers if it detects that the system is running on batteries. Since we implemented this fix, we haven’t heard many complaints.
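The battery rule boils down to a small policy decision. The sketch below is illustrative only: the type and function names are hypothetical, the power state is assumed to come from the operating system's power notifications, and the 1ms and 15ms values are the figures used earlier in this article.

```typescript
type PowerSource = "ac" | "battery";

// Hypothetical policy helper: which timer period to ask the OS for.
function chooseTimerPeriodMs(power: PowerSource): number {
  const FAST_PERIOD_MS = 1; // high-resolution period requested on AC power
  const DEFAULT_PERIOD_MS = 15; // leave the system default alone on battery
  return power === "battery" ? DEFAULT_PERIOD_MS : FAST_PERIOD_MS;
}

const pluggedInPeriodMs = chooseTimerPeriodMs("ac");
const onBatteryPeriodMs = chooseTimerPeriodMs("battery");
```

The trade-off is deliberate: timers get slower on battery, in exchange for letting the machine reach its low-power states.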

Results

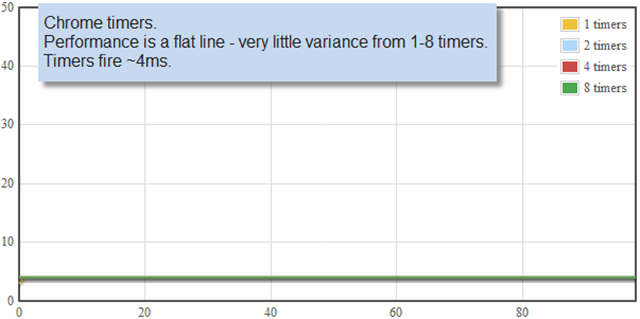

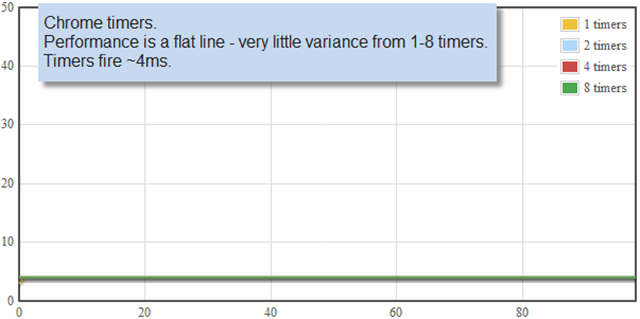

Overall, we’re pretty happy with the results. First off, we can look at John Resig’s timer performance test. In contrast to the default implementation, Chrome has very smooth, consistent, and fast timers.

Finally, here is the result of the Visual Sorting Test mentioned above. With a faster clock in hand, we see performance double.

Future Work

We’d still like to eliminate the use of timeBeginPeriod. It is unfortunate that it has such side effects on the system. One solution might be to create a dedicated timer thread, built atop the machine cycle counter (despite the problems with QueryPerformanceCounter), which wakens message loops based on self-calculated, sub-millisecond timers. This sounds trivial, but if we forget any operating system call which is stuck in a wait and don’t manually wake it, we’ll have janky timers. We’d also like to bring the current 4ms timer back down to 1ms. We may be able to do this if we better detect when web pages are accidentally spinning the CPU.

From the operating system side, we’d like to see sub-millisecond event waits built in by default which don’t use CPU interrupts or otherwise prevent CPU sleep states. A millisecond is a long time.

1. Although written in 2003, the data in this article is still relatively accurate: Win32 Performance Measurement Options.

2. http://developer.amd.com/assets/TSC_Dual-Core_Utility.pdf

3. Note: The latest versions of Flash (10) no longer use timeBeginPeriod.

NOTE: This article is my own view of events, and does not reflect the views of my employer.
