With my work on SPDY, I am often asked whether requiring TLS (aka SSL) is a good idea. Since SPDY is supposed to be about low-latency performance, how does something like TLS, which is traditionally thought to be slow, wedge its way into the design?

The answer is simple: users want it.

But how did we get here anyway?

If I told you that I was building a house with no locks, surely you’d ask me to install locks. Then, if I grumpily installed a lock only on the front door, you’d tell me to go install locks on all the doors. But this is essentially the web we have today. When you’re using TLS, nobody can get through your front door. But when you’re not using TLS, potential attackers can easily see exactly what you’re doing on every website you visit. How is it that we’ve built a house without locks?

When the web was invented in the early 1990s, there was little need for locks. It was about sharing information, not purchasing your new TV or sending your medical information to your doctor. And because it wasn’t a critical means of communication, would-be eavesdroppers would rather tap your phone than tap your Internet.

Netscape, recognizing the need for security on the web to facilitate e-commerce, invented SSL (the predecessor to TLS). At that time, SSL was computationally expensive and algorithmically unproven. Forcing everything to run over SSL then would have been impractical. Instead, two protocols were used: https for data exchanges which were critically sensitive, and http for everything else. It worked.

Since that time, the computational overhead of TLS has dropped by orders of magnitude, and the protocol has held up to algorithmic scrutiny. At the same time, the web has grown massively, evolving from a system designed for sharing of ideas into a system which carries our most sensitive and personal secrets. But despite that, the security of our communications has not changed much. Don’t get me wrong – it’s not all bad. The most critical information exchanged with our banks and online purchases is usually secured by using TLS, which is great. And on some sites, email is secure too. But the majority of web traffic is still sent insecurely, using HTTP.

Users have grown accustomed to security problems on the net which are far worse than the lack of protocol security, and the need for protection has been relatively low. But as our lives move from physical to digital, the interest of hackers, governments, and the otherwise unscrupulous in our traffic grows massively too.

So, from a user’s perspective, instead of asking the question, why does SPDY require encryption and authentication, a better question would be to ask, why not?

What Does TLS Do?

TLS, which users most commonly encounter when they visit a site with an https:// URL, offers two basic features: server authentication and data encryption.

Server authentication is a mechanism by which the client can verify that a site really is who it claims to be. For example, when you visit https://www.bankofamerica.com/, TLS uses a system of known trust points to validate that this site really is Bank of America, according to a service that your browser has chosen to trust because it complies with a detailed set of specific security practices. Yeah, it’s complex, but the complexity is hidden from the user.

Data encryption is what prevents others from reading the data that you send over the Internet.
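To make the two features concrete, here is a minimal client-side sketch using Python’s standard `ssl` module (an illustration chosen for this post, not part of SPDY). `ssl.create_default_context()` loads the platform’s trusted root certificates, the “known trust points” above; during the handshake the client checks that the server’s certificate chains to one of them and names the requested host, and everything sent afterward is encrypted. The helper names `make_client_context` and `fetch_peer_certificate` are hypothetical.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client-side TLS context that performs both jobs: server authentication
    (certificate chain and hostname checks) and encryption of the connection."""
    ctx = ssl.create_default_context()  # loads the platform's trusted CA roots
    # create_default_context() already sets these; spelled out here for clarity.
    ctx.check_hostname = True            # certificate must name the host we asked for
    ctx.verify_mode = ssl.CERT_REQUIRED  # chain must lead to a trusted root
    return ctx


def fetch_peer_certificate(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Connect to host:port, complete the TLS handshake, and return the validated
    server certificate. Raises ssl.SSLCertVerificationError if authentication fails."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            # From here on, tls.sendall()/tls.recv() carry encrypted records.
            return tls.getpeercert()
```

Calling `fetch_peer_certificate("www.bankofamerica.com")` needs network access; it returns the certificate’s fields (subject, `subjectAltName`, validity dates) only if authentication succeeds, and raises an exception otherwise.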

The Argument For Maintained Insecurity

At this point, I think I’ve heard all the arguments against TLS. Some are valid, and some are not. But we can overcome all of them.

Invalid Argument #1: Certificates Are Costly And Too Hard to Deploy

Certificates are a critical element of trust for implementing server authentication within TLS. Any site wishing to use TLS must get a certificate so that it can prove ownership of the site. Verifying your identity is a manual, offline, and usually time-consuming process, so the companies offering this service typically charge a fee.

The cost of a certificate used to be really high – even over $1000. But these days, certificates can be surprisingly cheap, sometimes less than $10 (or even free). At that price, the cost is insignificant.

Despite the low cost, maintaining a certificate on a website is difficult. Server administrators must take precautions to protect their identity, and getting the right keys and certificates to the right servers is time consuming.

But in the end, this is not an argument for the user. This is an argument for a website that wants to save a relatively small amount of money. User security is more important than that.

Invalid Argument #2: It’s Just a Youtube Video, We Don’t Need To Secure It

Some content is so public that many people believe it doesn’t need to be secured, and this is partially true. For example, I really don’t care if someone sees the traffic I sent to Facebook today, because I’m posting that data publicly on the web already. It’s obvious.

But the problem is that when we offer two modes of communication, one secure and one not secure, we punt the problem to the user: “Do I need to send this securely?” Even if the user did know the answer to that question, and most users don’t, what one user may consider public information may be very sensitive to another. The only way to solve the problem for everyone is to always send data securely so that users don’t need to disambiguate.

Invalid Argument #3: Schools and Families Can’t Filter With TLS

Earlier this year, Google enabled a new feature, Encrypted Web Search. This feature didn’t force all communications to use TLS, it simply allowed the users to choose it if they wanted to. Unfortunately, this feature rollout was matched with cries from schools that they could no longer enforce content filtering for their students, which they are required to do by law.

Side note: It’s ironic that Google is often criticized by our governments for not being sensitive enough about privacy. However, in this case, when Google rolled out a feature to enhance privacy, that same government complained that Google is preventing censorship of our children. But, that’s politics! 🙂

Seriously, schools have a legitimate need here. They want to protect children from adult material, and they have bought solutions to this problem from many vendors. But unfortunately, most of those solutions rely on the fact that the web is insecure to implement the filtering! If we encrypt the web, these filters no longer work, and that is a problem for schools. It should also be noted that these solutions already break when kids at school use freely available SSL proxies. But the filters don’t have to be perfect, so these problems are overlooked. The filtering vendors are in a constant arms race with services that attempt to circumvent the filters.

I really do sympathize with schools, and nobody wants to levy a costly solution on our already cash strapped schools. But filtering can be solved even in the presence of encryption; it is just a little harder. Many solutions exist, and we need to move the filtering to be certificate aware.
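The post doesn’t spell out what certificate-aware filtering looks like. One possible approach, sketched here as an assumption: instead of reading page contents, the filter looks at the names in the server’s certificate (for example, as observed at a school gateway during the handshake) and compares them against a blocklist. The certificate dict mirrors the shape returned by Python’s `ssl.SSLSocket.getpeercert()`; `BLOCKED_DOMAINS`, `names_in_certificate`, and `is_blocked` are hypothetical. This works at the granularity of a site, not an individual page.

```python
# Hypothetical blocklist: these domains and all of their subdomains are filtered.
BLOCKED_DOMAINS = {"adult.example", "proxy-bypass.example"}


def names_in_certificate(cert: dict) -> list[str]:
    """Collect DNS names from a certificate dict shaped like ssl's getpeercert()."""
    names = [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]
    if not names:
        # Fall back to the subject commonName when no subjectAltName is present.
        for rdn in cert.get("subject", ()):
            for key, value in rdn:
                if key == "commonName":
                    names.append(value)
    return names


def is_blocked(cert: dict, blocked: set[str] = BLOCKED_DOMAINS) -> bool:
    """Block if any certificate name equals, or is a subdomain of, a blocked domain."""
    for name in names_in_certificate(cert):
        host = name.lower().removeprefix("*.")  # "*.x.example" covers x.example's subdomains
        for domain in blocked:
            if host == domain or host.endswith("." + domain):
                return True
    return False
```

Matching on a leading dot (`".adult.example"`) keeps an unrelated name such as `notadult.example` from being caught by accident.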

It doesn’t make sense to sacrifice the security of the entire web so that we can continue to enable cheap filtering in schools today.

Invalid Argument #4: Debugging The Web Is More Difficult

A natural consequence of security is that you can’t read the communications between the clients and servers easily. For web developers, this can make it more difficult to find bugs. Yes, it’s true. Debugging is still possible, it is just harder. But developer laziness is not a good reason to keep the internet insecure.

Invalid Argument #5: Political Backlash

Some governments are pretty adamant about their need to filter the net, and they aggressively filter using complicated techniques. If the web attempts to move to an all-encrypted protocol, these governments may decide to ban the protocol altogether. Yeah, I guess that is true. At some point, the citizens might want to revolt. I’m lucky I don’t live there, but that’s no reason to keep other countries from choosing privacy.

Invalid Argument #6: Existing Servers At Capacity Will Break

Some websites today are already running their servers at full capacity. If we add security to the protocol, which requires even 1% more server resources, these sites could get slow.

While this is probably a true statement, it’s really not the user’s problem. I don’t see this as a compelling reason to not improve the security of the web.

Invalid Argument #7: Features of Transparent Proxies

Because the web is insecure today, it is possible for network operators to install transparent proxies which inspect the insecure traffic and change it. Sometimes these proxies do good things, like scan for viruses and malware. But other times, these proxies do bad things, such as turn off compression.

From experience, we know that there are a lot of problems with transparent proxies. And these proxies are generally considered to be an obstacle for protocol evolution on the internet. For instance, HTTP’s pipelining is currently disabled in all browsers largely because proxies make it impossible to deploy.

Enabling security on the web does not prevent the anti-virus and anti-malware “good” proxies. It just means they have to be explicit. But turning on security does prevent the proxies which intentionally or inadvertently break the protocol.

Ok – so that is the list of what I consider invalid arguments. But there are a couple of valid arguments too.

Valid Argument #1: TLS is Too Slow!

It’s true. TLS is too slow. This is in part because TLS is used so rarely that nobody has bothered to optimize it, and in part because it is difficult to change. The net result is that secure communications are slower than insecure communications. This will probably always be true, but that doesn’t mean we can’t shrink the problem enough to make it insignificant.

To understand the problem, we need to recognize there are 4 parts to TLS performance:

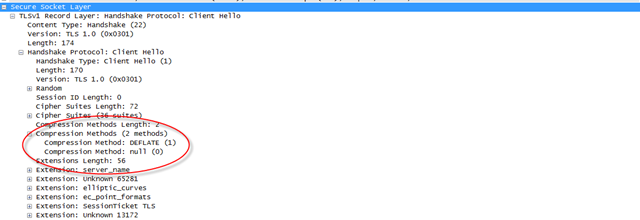

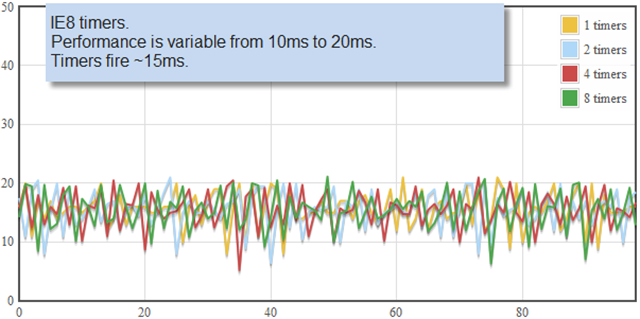

a) The round-trip-times of the TLS authentication handshake

b) The CPU cost of the PKI operations during the handshake

c) The additional CPU to encrypt data being transferred

d) The additional bandwidth due to TLS record overhead

a) The additional round-trips of TLS are real and need to be addressed. Today, the first connection to a site requires 2 extra round-trips for the full TLS handshake, and subsequent connections, which can resume the earlier session, require 1. Adam Langley has proposed a protocol change which reduces these to 0 round-trips. So for the sites you visit regularly, TLS will no longer incur any extra round trips.
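The cost of those round trips scales directly with the network round-trip time (RTT). A minimal sketch of the arithmetic, using the round-trip counts above and a hypothetical 100 ms RTT; it ignores CPU time and the TCP handshake itself, and the names are illustrative.

```python
# Extra round trips the TLS handshake adds before application data can flow:
# 2 for a full handshake, 1 for a resumed session, and 0 under the proposed
# change for sites the browser has visited before.
HANDSHAKE_ROUND_TRIPS = {"full": 2, "resumed": 1, "proposed_zero_rtt": 0}


def handshake_delay_ms(rtt_ms: float, mode: str) -> float:
    """Latency added by the TLS handshake on top of TCP, ignoring CPU time."""
    return HANDSHAKE_ROUND_TRIPS[mode] * rtt_ms


if __name__ == "__main__":
    rtt = 100.0  # hypothetical round-trip time, e.g. a long-haul connection
    for mode in HANDSHAKE_ROUND_TRIPS:
        print(f"{mode:>18}: +{handshake_delay_ms(rtt, mode):.0f} ms")
```

At a 100 ms RTT this gives 200 ms, 100 ms, and 0 ms of added delay respectively, so removing round trips pays off most on high-latency links.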

b) PKI operations are cheap these days. While these complex math computations took hundreds of milliseconds in the mid-1990s, faster processors make them take on the order of a millisecond or less today. With optional hardware offloading, this is just not a problem.
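One way to sanity-check this on your own machine, as a rough toy proxy rather than a real benchmark: time a 2048-bit modular exponentiation, the core arithmetic of an RSA private-key operation. The modulus and exponent below are random numbers rather than a real key, and pure Python is much slower than the optimized C libraries TLS servers use (which also apply the Chinese Remainder Theorem speedup), so treat the result as a rough upper bound. The function name `modexp_ms` is hypothetical.

```python
import secrets
import time


def modexp_ms(bits: int = 2048, trials: int = 5) -> float:
    """Average wall-clock time, in milliseconds, of one full-size modular
    exponentiation (c ** d mod n), the heart of an RSA private-key operation.

    Toy proxy only: n and d are random, not a real RSA key, and no CRT is used."""
    n = secrets.randbits(bits) | (1 << (bits - 1)) | 1  # odd, exactly `bits` long
    d = secrets.randbits(bits) | (1 << (bits - 1))      # full-length exponent
    c = secrets.randbelow(n)
    start = time.perf_counter()
    for _ in range(trials):
        pow(c, d, n)
    return (time.perf_counter() - start) * 1000 / trials


if __name__ == "__main__":
    print(f"~{modexp_ms():.1f} ms per 2048-bit modexp (pure Python)")
```

Even this unoptimized version typically finishes in tens of milliseconds or less on current hardware, well below the mid-1990s figures.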

c) Many people assume that bulk-data encryption is expensive. This is not true; the most common bulk encryption used with TLS today is the RC4 cipher. RC4 is extremely cheap computationally, and we’ve measured no additional latency cost compared to the NULL cipher. Other ciphers, such as AES, cost a little more, but they’re still quite fast and you don’t have to use them.
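To see why RC4 is so cheap, it helps to look at how little work it does. Below is a plain-Python sketch of the cipher (for illustration only, not a vetted implementation): after a one-time key setup, each byte costs two index updates, one swap, one table lookup, and one XOR. Encryption and decryption are the same operation. The function name `rc4_xor` is hypothetical.

```python
def rc4_xor(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt `data` with RC4 (the same operation in both directions).
    Illustration only: not a vetted implementation."""
    # Key-scheduling algorithm (KSA): permute 0..255 under control of the key.
    s = list(range(256))
    j = 0
    for i in range(256):
        j = (j + s[i] + key[i % len(key)]) % 256
        s[i], s[j] = s[j], s[i]
    # Pseudo-random generation (PRGA): per byte, two index updates, one swap,
    # one table lookup, and one XOR with the input.
    out = bytearray()
    i = j = 0
    for byte in data:
        i = (i + 1) % 256
        j = (j + s[i]) % 256
        s[i], s[j] = s[j], s[i]
        out.append(byte ^ s[(s[i] + s[j]) % 256])
    return bytes(out)
```

For example, `rc4_xor(b"Key", b"Plaintext").hex()` returns `"bbf316e8d940af0ad3"`, a widely published RC4 test vector. Python’s interpreter overhead hides how fast this is in C; the point is the tiny amount of work per byte.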

d) We’ve measured SSL using ~3% more bandwidth than non-SSL. That is a relatively low overhead, but it does add a non-trivial amount of latency: for a 320KB web page, 3% more data can add 30-80ms to the page load time. Fortunately, available bandwidth continues to rise, so we don’t see the 3% overhead as excessive.
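The 30-80ms figure is consistent with a bandwidth-bound link of roughly 1 to 2.5 Mbps; the post doesn’t state the link speed, so that range is an inference. The sketch below reproduces that arithmetic and also shows one contributor to the overhead, record framing, assuming a stream cipher suite such as RC4 with HMAC-SHA1 (a 5-byte record header plus a 20-byte MAC per record). Framing alone is well under 3% for full-size records; the rest of the measured overhead presumably comes from handshake messages such as the certificate chain and from smaller records. The function names and the 1400-byte record size are hypothetical.

```python
import math

# Per-record framing for a TLS 1.0-1.2 stream cipher suite such as RC4 with
# HMAC-SHA1: a 5-byte record header plus a 20-byte MAC. Block ciphers add an IV
# and padding on top of this; handshake bytes are not counted here.
RECORD_HEADER = 5
MAC_SHA1 = 20
MAX_RECORD_PAYLOAD = 16_384  # 2**14 bytes, the TLS maximum plaintext per record


def record_overhead_bytes(payload: int, record_size: int = MAX_RECORD_PAYLOAD) -> int:
    """Extra bytes added by framing when `payload` bytes are split into records."""
    records = math.ceil(payload / record_size)
    return records * (RECORD_HEADER + MAC_SHA1)


def extra_transfer_ms(page_bytes: int, overhead: float, bandwidth_mbps: float) -> float:
    """Time to move the additional bytes over a bandwidth-bound link."""
    extra_bits = page_bytes * overhead * 8
    return extra_bits / (bandwidth_mbps * 1_000_000) * 1000


if __name__ == "__main__":
    page = 320 * 1024  # the 320KB page from the text
    for mbps in (1.0, 2.5):
        print(f"{mbps} Mbps: +{extra_transfer_ms(page, 0.03, mbps):.0f} ms at 3% overhead")
    for size in (MAX_RECORD_PAYLOAD, 1400):
        extra = record_overhead_bytes(page, size)
        print(f"{size}-byte records: {extra} framing bytes ({extra / page:.2%})")
```

For the 320KB page this prints about +79 ms at 1 Mbps and +31 ms at 2.5 Mbps; full 16KB records add 500 bytes (0.15%) of framing, while 1400-byte records add 5,875 bytes (1.79%).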

Overall, we believe (b) and (c) are non-problems. We believe we can solve most of (a), and that (d) is an acceptable cost, mitigated by bandwidth improvements over time.

Valid Argument #2: Caching Servers Don’t Work With TLS

One of the most complicated parts of web communications today is communicating with proxy servers. Proxies have many purposes, but one of them is to cache content closer to the client to reduce latency. For example, if two users in Australia are both communicating with the same server in London, it reduces latency for the second user if he can use a cached copy of the content that the first user already downloaded. Unfortunately, when all traffic is private, the proxy servers don’t know what data is being sent, and so they can’t cache it for other users. This can make the user’s bandwidth costs go up and also increase his page load times.

But over the past few years, reliance on proxy servers has eased as Content Delivery Networks have grown. Content Delivery Networks (CDNs) are a mechanism by which websites can distribute content to a server close to the user, regardless of whether the user has set up a proxy. CDN usage today is extremely common and growing. Almost all of the top-100 websites use CDNs to offer performance which is even better than proxy servers. Speculatively, in the future all content may be delivered through CDNs, as more and more sites move to hosted cloud computing platforms that have the infrastructure of a CDN.

Given the use of CDNs and the decentralized use of proxy servers, it is hard to quantify the benefit of proxy servers today. We know that the majority of Internet users do not use caching proxies at all. But we also know that for some users they are very useful. And if we move to all secure communications, those users will no longer have caching proxies. I don’t have a good answer for this problem, except to say that I don’t think it is a huge problem, and that it is mitigated by CDNs. But I confess I lack data as well.

Conclusion

Changing protocols is not something we do very often. TCP and IP are over 30 years old, despite attempts to replace them. As such, the opportunity to improve network security does not come up often, and is not one to be overlooked. SPDY is a unique opportunity to improve not just HTTP, but also the fundamental underpinnings of how we communicate.

From the user’s perspective, the only argument against TLS is performance, which we believe we are actively solving. The other arguments don’t help the users – they help server and IT administrators. As we look to build the next generation Internet protocols, should we be looking out for the user or the administrator? SPDY wants to make sure the user is safe and in control.

It would be easier to make SPDY successful without TLS. Biting off security in addition to performance is a huge task that almost seems counterproductive. But lack of security is such a gaping hole in today’s Internet infrastructure that we just don’t feel it can be overlooked. If security does prove to be an insurmountable task, we can always fall back to the same-old-same-old insecure plan. That is easy. But pushing things forward? When will we ever get that opportunity again? Maybe never.

It is sad that network traffic today is insecure. It will be absolutely tragic if network traffic in the year 2020 is still insecure.