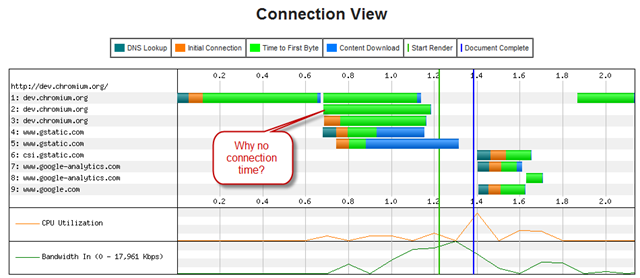

I was playing around on WebPageTest today, trying out its new IE9 test feature, and I noticed something new that IE9 does: preconnect.

What is preconnect? Preconnect is making a connection to a site before you have a request to use that connection for. The browser may have an inkling that it will need the connection, but if it doesn’t have a request in hand yet, it is a speculative connection, and therefore a preconnect.
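To make the idea concrete, here is a minimal sketch in Python of a connection pool that can be warmed before any request exists. The `PreconnectPool` class and its method names are made up for illustration and say nothing about how IE9 or Chrome implement this internally. The connector is injectable so the logic can be exercised without touching the network; by default it uses `socket.create_connection`.

```python
import socket
from typing import Callable, Dict, List, Tuple

Endpoint = Tuple[str, int]  # (host, port)


class PreconnectPool:
    """Hypothetical pool of idle, already-connected sockets keyed by endpoint."""

    def __init__(self, connect: Callable[[Endpoint], object] = socket.create_connection):
        self._connect = connect
        self._idle: Dict[Endpoint, List[object]] = {}
        self.connects_made = 0  # total connections opened, speculative or not

    def _open(self, endpoint: Endpoint) -> object:
        self.connects_made += 1
        return self._connect(endpoint)

    def preconnect(self, endpoint: Endpoint) -> None:
        """Speculatively open a connection before any request needs it."""
        self._idle.setdefault(endpoint, []).append(self._open(endpoint))

    def acquire(self, endpoint: Endpoint) -> Tuple[object, bool]:
        """Return (connection, was_warm). A warm connection skips the connect delay."""
        idle = self._idle.get(endpoint)
        if idle:
            return idle.pop(), True
        return self._open(endpoint), False
```

If a request shows up for an endpoint that was preconnected, `acquire` hands back the warm socket and the request pays no connect time. If no request ever shows up, the socket just sits in `_idle`, which is the wasted-connection case.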

IE9 isn’t the first to use preconnect, of course. Chrome has been doing preconnect since around Chrome 7. So it is nice to see other browsers validating our work. But IE9 is the first browser I know of which appears to preconnect right out of the gate, without any data about a site. Chrome, on the other hand, will only preconnect based on data it has learned by observing network activity through repeat visits to a site. As such, Chrome usually issues the same number of connects and generates the same network traffic, just with less delay.

Observations

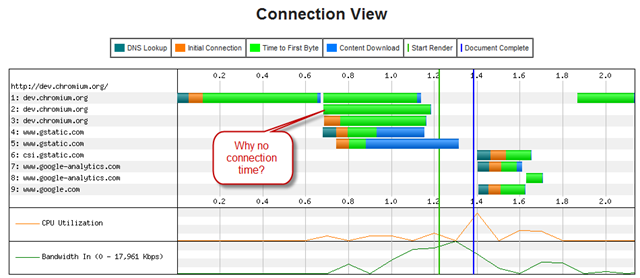

Here is the trace where I first noticed this behavior on WebPageTest. Notice that WebPageTest did not record any connect-time delay on the second request to dev.chromium.org. How can this be? Because the socket was already connected.

To understand this better, I then opened up Wireshark and watched the packets. The packet trace clearly shows that IE9 simply opens 2 connections, back to back, for every domain the browser connects to. This isn’t a horrible assumption for the browser to make – since many sites will indeed require more than one connection per domain already.

Some Wastefulness

But it also wasn’t hard to notice cases where it connects wastefully. On belshe.com, for instance, there is a single link to a YouTube video requiring only one resource. IE9 opens two connections to YouTube anyway (WebPageTest doesn’t show the unused connection in its own trace, by the way, but it is in the packet trace!). One connection loads the image, the other connection is wasted. YouTube diligently kept that connection open for 4 minutes too! There are also a couple of 408 error responses from Akamai – it appears that the Akamai server will send a graceful 408 error response to an empty connection after some period of time.

But is this a problem?

As long as the level of accidental connects is minimal, probably not. And much of the time, 2 connections are useful. It would be great to hear from the IE9 team about their exact algorithm and to see whether they have data on how many extra resources they are using.

WebPageTest already offers some clues. For belshe.com, for example, I can see that IE8 uses 20 connections, while IE9 is now using 23 connections to render the page. That is 3 extra connections on top of 20, or 15% overhead, which is probably not the end of the world.

What about SSL?

I love SSL, so of course this got me wondering what IE9 does about preconnecting HTTPS sites too. Sure enough, IE9 happily preconnects SSL too. [Sadly – it even forces the server to do two full SSL handshakes, wastefully generating 2 session-ids. This is a bit more annoying, because that means the main site was just put through double the number of PKI operations. Fortunately, PKI operations are relatively cheap these days. I’d complain more, but, tragically, Chrome is not much better yet. Did I mention that SSL is the unoptimized frontier?]

What Would ~~Brian Boitano~~ Chrome Do?

As I mentioned, Chrome has been doing preconnect for some time. But Chrome doesn’t preconnect right out of the gate. We were so worried about this over-connecting business that we added ~~gloms of more complicated code~~ highly sophisticated, artificial intelligence before turning it on at all 🙂

Specifically, Chrome learns the network topology as you use it. It learns that when you connect to www.cnn.com, you need 33 resources from i2.cdn.turner.com, 71 resources from i.cdn.turner.com, 5 resources from s0.2mdn.net, etc. Over time, if these patterns remain true, Chrome will use that data to initiate connections as soon as you start a page load. Because it is based on data, we hope and expect that it will connect incorrectly much less often. In fact, it should be making the same number of connections, just a little earlier than it otherwise would. But all of this is an area that is under active research and development. (By the way, if you want to see how Chrome works, check out the ultra-chic-but-uber-geek “about:dns” page in your Chrome browser.)
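For a rough feel of what "learning the topology" could look like, here is a toy sketch in Python. It is not Chrome's actual algorithm: the `SubresourcePredictor` class, the `min_visits` and `min_rate` thresholds, and the rule itself (preconnect to a host only if it showed up on at least half of the past visits) are all assumptions chosen for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List


class SubresourcePredictor:
    """Toy model: remember which hosts each page pulled resources from."""

    def __init__(self, min_visits: int = 2, min_rate: float = 0.5):
        self.min_visits = min_visits  # visits required before predicting anything
        self.min_rate = min_rate      # fraction of visits a host must appear in
        self._visits: Dict[str, int] = defaultdict(int)
        self._seen: Dict[str, Counter] = defaultdict(Counter)

    def record_visit(self, page_host: str, subresource_hosts: Iterable[str]) -> None:
        """Record one page load and the distinct hosts it fetched from."""
        self._visits[page_host] += 1
        for host in set(subresource_hosts):
            self._seen[page_host][host] += 1

    def hosts_to_preconnect(self, page_host: str) -> List[str]:
        """Hosts seen often enough on past visits to justify an early connect."""
        visits = self._visits.get(page_host, 0)
        if visits < self.min_visits:
            return []  # no data yet: do not connect speculatively
        return sorted(
            host
            for host, count in self._seen[page_host].items()
            if count / visits >= self.min_rate
        )
```

On a first visit this predictor returns nothing, so no speculative connections are made. Only hosts that keep showing up across repeat visits get an early connect, which is why a data-driven approach should rarely open a connection that goes unused.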

So does all this fancy stuff make my Internet faster?

Fortunately, we have pretty good evidence that it does. We’re monitoring this all the time, and I’d say this work is still in its infancy. But here is some data from Chrome’s testing in 2010.

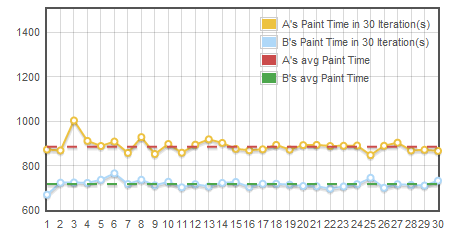

Our Chrome networking test lab has a fleet of client machines (running Linux), a simulated network using dummynet (see Web-Page-Replay for more information), and some fast, in-memory servers. We record content from the top-35 websites, and can play it back repeatedly with high fidelity. Then we can change the network configuration and browser configuration and see how it all works out.

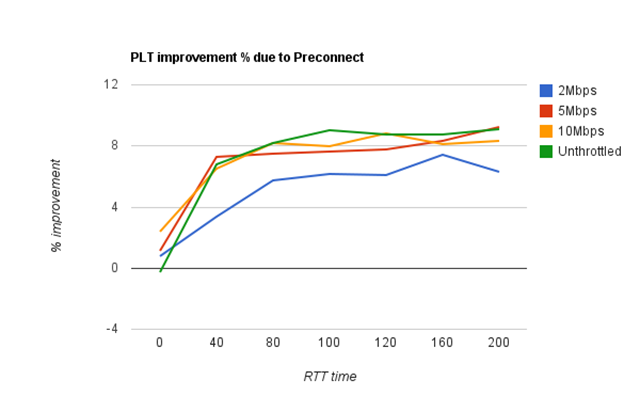

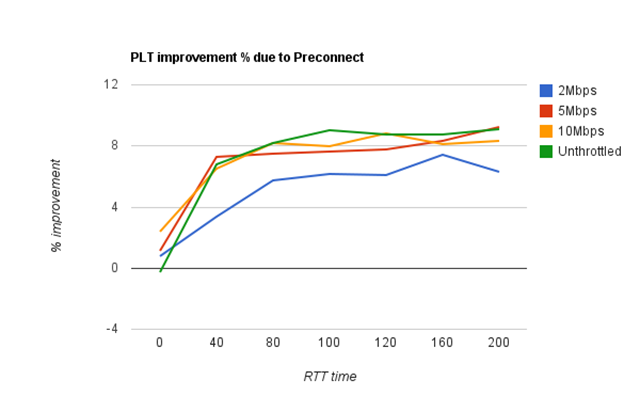

In my test, I picked 4 different network configurations. I then varied the RTT on each connection from 0 to 200ms.

Here is a graph of page load time (PLT) improvements in this test.

Overall, we’re pretty happy with this result. When the RTT is zero, preconnect doesn’t really help, because the connections are basically free (from a latency perspective). But for connections with RTTs greater than ~50ms, we see a solid 7-9% improvement across the board. (Typical RTTs are 80-120ms.)
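A back-of-the-envelope model shows why the benefit scales with RTT. This is not the methodology used in the test lab, just a simplified sketch: it assumes a TCP handshake costs one round trip before a request can be sent, that a full SSL handshake adds two more, and it ignores DNS lookups and server processing time. The function name `handshake_cost_ms` is made up for this example.

```python
def handshake_cost_ms(rtt_ms: float, tls: bool = False) -> float:
    """Estimated setup delay that a fully warmed connection would avoid.

    Assumptions: 1 round trip for the TCP handshake, plus 2 round trips
    for a full (non-resumed) SSL handshake when tls is True.
    """
    tcp_round_trips = 1
    tls_round_trips = 2 if tls else 0
    return rtt_ms * (tcp_round_trips + tls_round_trips)


# Setup delay avoided per connection at a few round-trip times.
for rtt in (0, 50, 100, 200):
    print(rtt, handshake_cost_ms(rtt), handshake_cost_ms(rtt, tls=True))
```

At an RTT of zero the model saves nothing, which matches the flat result at the left edge of the graph. The savings per connection grow linearly with RTT, though the page-level improvement is a smaller percentage because connection setup is only one part of loading a page.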

The Larger Question

While this is great for performance now, I am worried about the wastefulness of HTTP on the Internet. We used to only have one domain per site, now we have 7. We used to have only 2 connections per domain, but now we have 6. And on top of that, now we’re preconnecting when we don’t even need to?

With its proliferation of TCP connections, HTTP has been systematically sidestepping all of TCP’s slow start and congestion controls. Sooner or later, will the Internet break? Throwing inefficiency at the problem can’t go on forever.

So one last note – a blatant plug for SPDY

The downside of preconnect is a big part of why we’re working on SPDY. HTTP has been nothing short of a heroic protocol and made the Internet as we know it possible. But as we look to the next generation of rich media sites with low latencies, it is clear that today’s HTTP can’t perform at that level.

SPDY hopes to solve much of HTTP’s connection problems while also providing better performance and better security.

With that, I guess I need to get back to work…