After my last article illustrated the length of our Certificate Chains, many people asked me, “OK – well, how do I get a small one?”

The obvious answer is to get your certificate signed as close to the root of a well-rooted Certificate Authority (CA) as possible. But that isn’t very helpful. To answer the question, let's look at a few of the problems and tradeoffs.

Problem #1: Most CAs Won’t Sign At The Root

Most CAs won’t sign directly from the root. Root CAs are key to our overall trust on the web, so simply having them online is a security risk. If a root is compromised, it can send a shockwave through our circle of trust. As such, most CAs keep their root servers offline most of the time, and only bring them online occasionally (every few months) to sign for a subordinate CA in the chain. The day-to-day signing is most often done by the subordinate.

While this is already considered a ‘best practice’ for CAs, Microsoft’s Windows Root CA Program Requirements were updated just last month to require that leaf certificates not be signed directly by the root. From section F-2:

All root certificates distributed by the Program must be maintained in an offline state – that is, root certificates may not issue end-entity certificates of any kind, except as explicitly approved from Microsoft.

Unfortunately for latency, this is probably the right thing to do. So expecting a leaf certificate directly from the root is unreasonable. The best we can hope for is one level down.

Problem #2: “Works” is more important than “Fast”

Having your site be accessible to all of your customers is usually more important than being optimally fast. If you use a CA not trusted by 1% of your customers, are you willing to lose those customers because they can’t reach your site? Probably not.

To solve this, we might wish that we could serve multiple certificates, and always present each client a certificate which we know that specific client will trust (e.g. if an old Motorola phone from 2005 needs a different CA, we could use a different certificate just for that client). But alas, SSL does not expose a user-agent as part of the handshake, so the server can’t do this. Again, hiding the user agent is important from a privacy and security point of view.

Because we want to reach all of our clients, and because we don’t know which client is connecting to us, we simply have to use a certificate chain which we know all clients will trust. And that leads us to either presenting a very long certificate chain, or only purchasing certificates from the oldest CAs.

I am sad that our SSL protocol gives the incumbent CAs an advantage over new ones. It is hard enough for a CA to get accepted by all the modern browsers. But how can a CA be taken seriously if it isn’t supported by 5-10% of the clients out there? Or if users are left with a higher-latency SSL handshake?

Problem #3: Multi-Rooting of CAs

We like to think of the CA trust list as a well-formed tree where the roots are roots, and the non-roots are not roots. But, because clients change their trust points over time, this is not the case. What is a root to one browser is not a root to another.

As an example, we can look at the certificate chain presented by www.skis.com. Poor skis.com has a certificate chain of 5733 bytes (4 pkts, 2 RTs), with the following certificates:

- skis.com: 2445 bytes

- Go Daddy Secure Certification Authority: 1250 bytes

- Go Daddy Class 2 Certification Authority: 1279 bytes

- ValiCert Class 2 Policy Validation Authority: 747 bytes
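To see where “4 pkts, 2 RTs” comes from, here is a small worked sketch. The four sizes above add up to 5721 bytes; the 12-byte gap to 5733 is consistent with the 3-byte length prefix that precedes each certificate in the TLS Certificate message. The packet and round-trip math rests on two assumptions of mine, not measurements: a 1460-byte TCP payload per packet, and an initial congestion window of 3 packets (what RFC 3390 allows for that segment size), doubling each round trip. It also ignores the handshake headers and ServerHello that share those packets.

```python
import math

# Certificate sizes in bytes for www.skis.com, as listed above.
SKIS_CHAIN = [2445, 1250, 1279, 747]

LENGTH_PREFIX = 3  # each certificate in the TLS Certificate message has a 3-byte length
MSS = 1460         # assumption: TCP payload bytes per packet on a typical Ethernet path
INIT_CWND = 3      # assumption: initial congestion window, in packets


def chain_bytes(sizes):
    """Certificate bytes plus one 3-byte length prefix per certificate."""
    return sum(sizes) + LENGTH_PREFIX * len(sizes)


def packets(nbytes, mss=MSS):
    """Number of full-size TCP packets needed to carry nbytes."""
    return math.ceil(nbytes / mss)


def round_trips(npackets, cwnd=INIT_CWND):
    """Simplified slow start: the window doubles after every round trip."""
    rts, sent = 0, 0
    while sent < npackets:
        sent += cwnd
        cwnd *= 2
        rts += 1
    return rts


for label, sizes in [("full chain", SKIS_CHAIN), ("leaf + intermediate", SKIS_CHAIN[:2])]:
    n = chain_bytes(sizes)
    p = packets(n)
    print(f"{label}: {n} bytes, {p} pkts, {round_trips(p)} RTs")
```

Under these assumptions the full chain reproduces the 5733 bytes, 4 packets and 2 round trips quoted above, while sending only the first two certificates (3701 bytes, 3 packets) would fit in a single round trip.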

In Firefox, Chrome and IE (see note below), the 3rd certificate in that chain (Go Daddy Class 2 Certification Authority) is already considered a trusted root. The server sent certificates 3 and 4, and the client didn’t even need them. Why? This is likely due to Problem #2 above. Some older clients may not consider Go Daddy a trusted root yet, and therefore, for compatibility, it is better to send all 4 certificates.
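The “which certificates does this client actually need?” question can be modeled as a walk from the leaf toward the root that stops at the first certificate the client already trusts. This is a minimal sketch that matches certificates by name only (no signature checking); the two trust stores are hypothetical, and treating the ValiCert certificate as self-signed is an assumption made for the sketch.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str
    size: int  # bytes


def certs_client_needs(chain: List[Cert], trusted_roots: Set[str]) -> Optional[List[Cert]]:
    """Return the leading part of `chain` this client must receive to reach one of
    its own trusted roots, or None if the chain never reaches such a root."""
    for i, cert in enumerate(chain):
        if cert.subject in trusted_roots:
            return chain[:i]       # client already holds this certificate locally
        if cert.issuer in trusted_roots:
            return chain[: i + 1]  # issuer is a local root; stop after this one
    return None


# The skis.com chain from above; each certificate is issued by the next one.
# Assumption: the last certificate is treated as self-signed.
SKIS_CHAIN = [
    Cert("skis.com", "Go Daddy Secure Certification Authority", 2445),
    Cert("Go Daddy Secure Certification Authority", "Go Daddy Class 2 Certification Authority", 1250),
    Cert("Go Daddy Class 2 Certification Authority", "ValiCert Class 2 Policy Validation Authority", 1279),
    Cert("ValiCert Class 2 Policy Validation Authority", "ValiCert Class 2 Policy Validation Authority", 747),
]

# Hypothetical trust stores, for illustration only.
MODERN_CLIENT = {"Go Daddy Class 2 Certification Authority",
                 "ValiCert Class 2 Policy Validation Authority"}
OLDER_CLIENT = {"ValiCert Class 2 Policy Validation Authority"}

for name, roots in [("modern", MODERN_CLIENT), ("older", OLDER_CLIENT)]:
    needed = certs_client_needs(SKIS_CHAIN, roots)
    print(name, [c.subject for c in needed], sum(c.size for c in needed), "bytes")
```

Because the server can’t tell which store it is talking to, it has to send at least what the most restrictive client it wants to support needs, and modern clients pay for the extra bytes.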

What Should Facebook Do?

Obviously I don’t know exactly what Facebook should do. They’re smart and they’ll figure it out. But FB’s large certificate chain suffers the same problem as the Skis.com site: they include a cert they usually don’t need in order to ensure that all users can access Facebook.

Recall that FB sends 3 certificates. The 3rd is already a trusted root in the popular browsers (DigiCert), so sending it is superfluous for most users. The DigiCert cert is signed by Entrust. I presume they send the DigiCert certificate (1094 bytes) because some older clients don’t have DigiCert as a trusted root, but they do have Entrust as a trusted root. I can only speculate.

Facebook might be better served to move to a more well-rooted vendor. This may not be cheap for them.

Aside: Potential SSL Protocol Improvements

If you’re interested in protocol changes, this investigation has already uncovered some potential improvements for SSL:

- Exposing some sort of minimal user-agent would help servers select an optimal certificate chain for each customer. Or, exposing some sort of optional “I trust CA root list #1234” would allow the server to select a good certificate chain without knowing anything about the browser other than its root list. Of course, even this small amount of information does sacrifice some privacy.

- The certificate chain is not compressed. It could be, and some of these certificates compress by 30-40%.

- If SNI were required (sadly still not supported on Windows XP), sites could avoid lengthy lists of subject names in their certificates. Since many sites separate their desktop and mobile web apps (e.g. www.google.com vs m.google.com), this may be a way to serve better certificates to mobile vs. desktop clients.
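If you want to check the compression point against your own chain, a rough sketch is to export each certificate in DER form (for example with `openssl x509 -in cert.pem -outform DER -out cert.der`) and DEFLATE the concatenation. This measures generic zlib compression only, not any SSL protocol mechanism. Part of the gain comes from repeated names: an intermediate’s name appears as the issuer of one certificate and as the subject of the next.

```python
import sys
import zlib
from pathlib import Path


def compression_savings(chain_der: bytes, level: int = 9) -> float:
    """Fraction of bytes saved by DEFLATE-compressing the concatenated chain."""
    if not chain_der:
        return 0.0
    compressed = zlib.compress(chain_der, level)
    return 1 - len(compressed) / len(chain_der)


if __name__ == "__main__":
    # Pass DER-encoded certificate files in chain order (file names are up to you).
    chain = b"".join(Path(p).read_bytes() for p in sys.argv[1:])
    print(f"{len(chain)} bytes, {compression_savings(chain):.0%} saved")
```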

Who Does My Browser Trust, Anyway?

All browsers use a “certificate store” which contains the list of trusted root CAs.

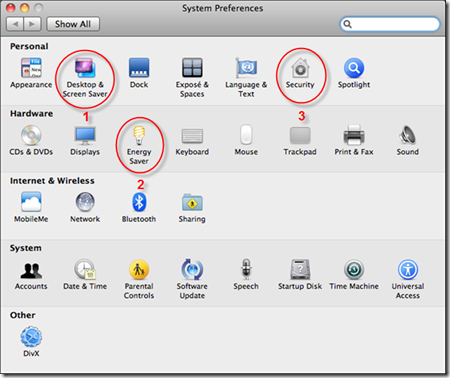

The certificate store can either be provided by the OS, or by the browser.

On Windows, Chrome and IE use the certificate store provided by the operating system, so they share the same points of trust. However, this means that the trust list is governed by the OS vendor, not the browser. I’m not sure how often this list is updated for Windows XP, which is still used by 50% of the world’s internet users.

On Mac, Chrome and Safari use the store provided by the operating system.

On Linux, there is no operating system provided certificate store, so each browser maintains its own certificate store, with its own set of roots.

Firefox, on all platforms (I believe, though I might be wrong on this), uses its own certificate store, independent of the operating system’s store.

Finally, on mobile devices, everyone has their own certificate store. I’d hate to guess at how many there are or how often they are updated.
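Every TLS client answers “who do I trust?” for itself, and you can poke at one of them from a script. Python’s `ssl` module is just another client with its own view: this sketch prints where the underlying OpenSSL build looks for roots by default, counts the roots a default context has loaded, and on Windows also counts the operating system’s ROOT store. Note that `get_ca_certs()` does not list certificates from a CA directory (capath) until they have been used, so a count of zero does not necessarily mean verification will fail.

```python
import ssl


def describe_trust_store() -> list:
    """Print where this Python/OpenSSL build looks for trusted roots, and list a few."""
    paths = ssl.get_default_verify_paths()
    print("default cafile:", paths.cafile)
    print("default capath:", paths.capath)

    ctx = ssl.create_default_context()  # loads the platform's default roots
    roots = ctx.get_ca_certs()          # decoded dicts; capath entries only appear once used
    print(len(roots), "root certificates loaded")
    for cert in roots[:5]:
        # 'subject' is a tuple of RDNs, each a tuple of (name, value) pairs.
        subject = {k: v for rdn in cert.get("subject", ()) for k, v in rdn}
        print("  ", subject.get("organizationName") or subject.get("commonName"))

    if hasattr(ssl, "enum_certificates"):  # Windows only: the OS certificate store
        print(len(ssl.enum_certificates("ROOT")), "entries in the Windows ROOT store")
    return roots


if __name__ == "__main__":
    describe_trust_store()
```

Running it on different machines is a quick way to see how much the answer depends on the platform.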

Complicated, isn’t it?

Yeah, Yeah, but Where Do I Get The Best Certificate?

If you read this far, you probably realize I can’t really tell you. It depends on who your target customers are, and how many obscure, older devices you need to support.

From talking to others who are far more knowledgeable on this topic than I, it seems like you might have the best luck with either Equifax or Verisign. Using the most common CAs will have the side benefit that the browser may have cached the OCSP responses for any intermediate CAs in the chain already. This is probably a small point, though.

Some of the readers of this thread pointed me at what appears to be the smallest, well-rooted certificate chain I’ve seen. https://api-secure.recaptcha.net has a certificate signed directly at the root by Equifax. The total size is 871 bytes. I don’t know how or if you can get this yourself. You probably can’t.

Finally, Does This Really Matter?

SSL has two forms of handshakes:

- Full Handshake

- Session Resumption Handshake

All of this certificate transfer, OCSP and CRL verification only applies to the Full Handshake. Further, OCSP and CRL responses are cacheable, and are persisted to disk (at least with the Windows Certificate Store they are).

So, how often do clients do a full handshake, receiving the entire certificate chain from the server? I don’t have perfect numbers to cite here, and it will vary depending on how frequently your customers return to your site. But there is evidence that this is as high as 40-50% of the time. Of course, the browser bug mentioned in the prior article affects these statistics (6 concurrent connections, each doing full handshakes).

And how often do clients need to verify the full certificate chain? This appears to be substantially less often, thanks to the disk caching. Our current estimates are that fewer than 5% of SSL handshakes involve OCSP checks, but we’re working to gather more precise measurements.
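One way to put these rates together is a back-of-the-envelope expected cost per connection. The 40-50% full-handshake rate and the 5% OCSP figure come from the text above (5% being an upper bound); the per-event costs (1 extra round trip for a long chain, 2 for an uncached OCSP check) are hypothetical numbers chosen only for illustration.

```python
import math


def expected_extra_rtts(p_full: float, chain_rtts: float,
                        p_ocsp: float, ocsp_rtts: float) -> float:
    """Average extra round trips per connection.

    Only full handshakes carry the certificate chain; session resumptions skip it.
    p_ocsp is the fraction of *all* handshakes that trigger an uncached OCSP check.
    """
    return p_full * chain_rtts + p_ocsp * ocsp_rtts


# Hypothetical costs: a long chain adds 1 round trip, an OCSP check adds 2.
for p_full in (0.40, 0.50):
    cost = expected_extra_rtts(p_full, chain_rtts=1, p_ocsp=0.05, ocsp_rtts=2)
    print(f"{p_full:.0%} full handshakes -> {cost:.2f} extra RTs per connection")
```

With these assumptions the average cost works out to roughly half a round trip per connection: real, but modest next to other things a site might optimize.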

In all honesty, there are probably more important things for your site to optimize. This is a lot of protocol gobbledygook.

Thank you to agl, wtc, jar, and others who provided great insights into this topic.